The central website running Arisia '21 was a monumental undertaking, and the people behind it have a lot of my respect [and sympathy] for pulling off a complex event in what was ultimately a smooth and integrated way. Sure, there were some missing pieces and a few hiccups, but you likely won't find any online endeavor on this scale entirely free of any of that. As Justin, one lead of the "Remote" design team, put it in his own post-con summary:

Most components of that were a swirling cloud of buzzwords from my backward view -- Scala, Angular, Play framework, TypeScript -- and personally I had only recently smelled PHP and Python from a distance, but hadn't really gotten into working with them much. Not to mention that I had only started poking around GitHub maybe a month beforehand. There wasn't really enough time for me to come up to speed on any of those things and usefully help. However, I fell into my usual patterns when it came to evaluating the work product, particularly from the standpoint of resource containment.

Websites frequently do pull components from third-party repositories and content-distribution networks, partially for speed and partially for code that isn't maintained in-house but serves some desired function. This has its downsides too, such as implicitly extending one's security perimeter out to the practices and competence of those third parties, and some degree of sketchiness certainly exists in those areas. This is why it's *my* standard practice to note such things and, at a minimum, ask content providers "hmm, did you really want to do that?"


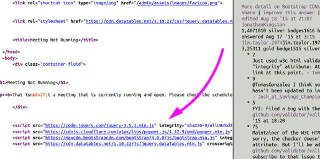

So once I finally got onto the convention website, my first instinct was to look at some page source, and I immediately got that "oh no" reaction from seeing some very typical third-party callouts. But at the same time I noticed something I hadn't before: the "integrity" attribute, which is more properly called Subresource Integrity and works alongside cross-origin resource sharing, intended to at least sanity-check what a CDN hands out. Except that it's up to the local browser to actually check that, so it's more overhead on the client side if it even supports the mechanism. And where is one supposed to gather those "verified" hashes in the first place? As part of a still-experimental API at the time, it wasn't going to matter much, but more importantly there wasn't time to try and pull vetted copies of all of these resources in-house and serve them directly. If online.arisia.org were a bank or medical portal or tax-payment gateway or anything dealing in critical personal data, that would be a different story. But it wasn't, and about 0.1% of the user base actually notices this sort of thing, so we plunged onward.
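On the question of where those hashes come from: if you already hold a copy of the file that you trust, you can compute the value yourself. Here is a minimal sketch in Python, assuming the usual form of an integrity value (an algorithm name, a hyphen, then the base64 of the raw digest). The function names, the sample script body, and the `cdn.example` URL are all made up for illustration, and a real attribute may list several hashes separated by spaces, which this does not handle.

```python
import base64
import hashlib
import hmac

# Hash algorithms commonly accepted in an integrity attribute.
SUPPORTED = ("sha256", "sha384", "sha512")


def sri_hash(content: bytes, algorithm: str = "sha384") -> str:
    """Return an integrity value of the form '<algorithm>-<base64 digest>'."""
    if algorithm not in SUPPORTED:
        raise ValueError(f"unsupported SRI algorithm: {algorithm}")
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def matches(content: bytes, integrity: str) -> bool:
    """Check fetched bytes against a single integrity value, roughly as a browser would."""
    algorithm = integrity.split("-", 1)[0]
    if algorithm not in SUPPORTED:
        return False
    return hmac.compare_digest(sri_hash(content, algorithm), integrity)


if __name__ == "__main__":
    # Hypothetical vetted copy of a third-party script.
    vetted = b"console.log('hello');\n"
    value = sri_hash(vetted)
    print(f'<script src="https://cdn.example/lib.js" integrity="{value}" '
          f'crossorigin="anonymous"></script>')
```

Of course this only moves the trust question back a step, since the hash is only as good as the copy it was computed from.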

One of my Zoom-hosting slots was for running a webinar, and confused reports had already come back into the tech-ops channel from someone else who also had a webinar and couldn't find the typical "start broadcast" or "go live" button. Because there wasn't one. The webinars were apparently launching out of the backend without the "practice session" enabled, which was the complete opposite of the workflow that several of us had been following while hand-launching webinars in ALL of our prior events. The sessions started "hot" to the world, and simply relied on attendees not having the join link until about five minutes before start time.


I found out later that it wasn't entirely Justin's omission; the Zoom API reference describes some fairly stupid defaults for webinar creation, and implies that the overall settings that one laboriously grinds through at the web portal don't even come into play when launching via API. Way to go, Zoom: gratuitously throw off your subscribers' workflows just because they were trying to do something a little more elegant.
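To illustrate the point, here is a rough sketch of what asking for the practice session explicitly might look like when a webinar is created through the API. This is not the Arisia backend's code (that was Scala), and the field names are my reading of the Zoom API reference for webinar creation, so treat `practice_session`, the `type` value, and the endpoint path as assumptions to verify against the current documentation. The helper name and sample values are hypothetical, and authentication and the actual HTTP call are left out.

```python
import json


def build_webinar_request(user_id: str, topic: str, start_time: str,
                          duration_minutes: int) -> tuple[str, dict]:
    """Build the path and JSON body for a create-webinar call.

    Assumed from the Zoom API reference: POST /users/{userId}/webinars,
    with a boolean 'practice_session' under 'settings'.
    """
    path = f"/users/{user_id}/webinars"
    body = {
        "topic": topic,
        "type": 5,                    # assumed: 5 means a scheduled webinar
        "start_time": start_time,     # ISO 8601 timestamp
        "duration": duration_minutes,
        "settings": {
            # Ask for the practice session explicitly instead of relying
            # on portal-level settings carrying over to API-made webinars.
            "practice_session": True,
        },
    }
    return path, body


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    path, body = build_webinar_request("me", "Example panel",
                                       "2021-01-16T15:00:00Z", 75)
    print("POST", path)
    print(json.dumps(body, indent=2))
```

The idea is simply to spell out every setting that matters in the request itself, on the assumption that nothing set in the web portal is inherited.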


Overall, though, the site did a great job of pulling together lots of critical information in one go-to place, making it pretty easy for everyone to find what they needed quickly. In less than a third the time that the project should have taken, the Remote folks spun up their magic and got it together for the good of the community, and for that they should be rightly proud. Never mind that a few bits might have been missing or stubbed off here and there; the audience doesn't see what the audience doesn't see. The numerous accolades that rolled in afterward speak for themselves.

This leaves a real dilemma to ponder, weighing the merits of (a) wanting to carry stuff like this forward and turn it into something really stellar for next year, versus (b) desperately wanting to never have to do this again and to simply get back to normal. Thought trends seem to point toward future events having significantly more online presence regardless, but until people start fully realizing the work factor involved in "going hybrid", that may remain only a lofty fantasy.