
In August 2021 I helped out with Readercon, another smallish Boston-local SF con that specifically concentrates on literature, authors, writing, and publishing. It had not only gone virtual but also got pushed back a month, just due to the difficulties in getting people to coordinate and populate the program. Tech preparation had already begun reasonably well in advance, at least on the pieces I had a hand in, leaving a fairly minimal amount of ramp-up closer in to the con.

This has roughly four sections:

Readercon has a website -- a brand new one, in fact -- but here it was only used for registration and serving out the public program schedule and various static documents. A somewhat risky decision was to base the live event itself heavily around Discord, which has some likelihood of annoying people who dislike it and/or can't navigate it well. There was an existing Readercon community in place on Discord already, but that structure wasn't really organized well for a convention, so rather than uproot that whole thing, a completely new event server would be built from the ground up. With my experiences from Arisia and prior cons, I threw my hat in to start and manage that process.

Perhaps this would be an opportunity to engage people more, and show Discord in a better light as an acceptable base to build on. As I continued experimenting and building parts of the platform, I became more convinced that we would accomplish that. I sent the following note to our wider tech group, reflecting on development and comparing it to the different approaches we'd seen over the past year.

Date: Fri, 30 Jul 2021 00:37:18 -0500
Subject: paced info-gating

So as I continue working on Readercon stuff, I'm coming to realize that it's basically doing what I kind of envisioned as a workable model for online events in general. In effect, it's all being interactively run out of a Discord server. Many of the "sessions" are actually just prerecorded videos, the URLs for which are given to people at the start of a particular time block, and they play them independently at roughly the same time and then comment and chat in a corresponding Discord text channel. The author/source/talent may or may not be there to respond; that will vary. Other sessions are live via Zoom, but only with the panelists in the meeting and the whole thing live-streamed out to Youtube which is where the audience watches. It's Youtube, but the videos and streams are all unlisted and ad-free -- a totally doable way to present content without impediments, annoyances, or security leakage.

The salient point is that there's presently no website or Konopas or other facility where all the *links* are publicly listed, or if there is I haven't been pointed to it. The main way that people receive pointers to the goodies is through automated announcements in the Discord when each session approaches, which contain clickable links that take them off to the streams.

And I seem to be the one writing the bot to handle that. I have a data file of all the sessions, or will have a complete one by the time the con starts, and a little nest of the usual grodey shell scripts to handle timed delivery of upcoming session data at the right times. Sure, all this could be done manually by con staff, but having a uniform delivery template with some nice markdown and formatting lends an authoritative air of consistency to the whole thing.

External injection is handled by some magic URLs called "webhooks", which are basically just obscure endpoints that a blob of JSON gets posted to and poof, it magically shows up in a particular channel that the webhook is bound to. So once I have the relevant data and the session is imminent, it gets plugged into a template and fired off through "curl".

The con Discord server itself is probably one of the most locked-down, but also most open-feeling ones that you'll find out there -- full entry is not just the typical checkbox we see on most of them, but an obscure-looking magic string that incoming attendees have to paste into a channel before getting normal attendee access. These strings are generated when people pay for the con, or for known staffers and volunteers. I only did the backend and bot support for that part; the integration at the readercon.org website was handled by others who know how to speak Drupal. At this point we've got over a dozen fun little bot functions, from the amusing to the administrative, and that's been primarily my bailiwick to set up.

So besides a static general schedule and other info available on a website, you could totally run the live mechanics of an online con out of Discord, using it to on-the-fly transmit stream links, Zoom sessions, and any other info. Some of our items are contained totally within Discord itself, such as the Kaffeeklatsches, and there's some fanciness there to limit signups and grant access to the right people at the right times just like in real life.

Today I also got dragged into the crew to help with re-captioning a pile of auto-captioned Youtube videos, which is mind-numbingly tedious work but really should get done to eliminate the often egregious AI-captioning errors. Despite that, Readercon could desperately use more help on that front, so if you're at all familiar with Youtube Studio or want to learn, even grinding through *one* video to make it present better could help. Most of what's needed is on relatively short readings, 20-25 minutes each. I believe that sending offers of assistance to conchair@readercon.org would get to the people who could set you up in their editing environment. Time is short, and there's a lot to do.

------------------------------
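The webhook delivery described in the note above can be sketched in a few lines. This is a minimal Python illustration, not the shell-and-curl scripts actually used at the con: the webhook URL is a placeholder, the session record and its field names (`title`, `start`, `url`) are hypothetical, and the User-Agent string is invented. The only parts taken from the text are the idea of plugging session data into a fixed template and POSTing the resulting JSON to a webhook.

```python
import json
import urllib.request

# Placeholder only: a real webhook URL contains a secret ID and token.
WEBHOOK_URL = "https://discord.com/api/webhooks/WEBHOOK_ID/WEBHOOK_TOKEN"


def build_announcement(session):
    """Plug one session record into a fixed embed template."""
    return {
        "embeds": [
            {
                "title": session["title"],
                "description": f"Starts {session['start']}\n{session['url']}",
                "color": 0xFF0000,  # red sidebar on the embed
            }
        ]
    }


def build_post(webhook_url, payload):
    """Prepare (but do not send) the POST that a webhook expects."""
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "User-Agent": "announce-sketch/0.1",  # hypothetical agent string
        },
        method="POST",
    )


# Hypothetical session record standing in for one row of the data file.
example = {
    "title": "Example Reading",
    "start": "Sat 2:00 PM EDT",
    "url": "https://www.youtube.com/watch?v=VIDEO_ID",
}
request = build_post(WEBHOOK_URL, build_announcement(example))
print(request.get_method(), request.full_url)
# To actually deliver it: urllib.request.urlopen(request)
```

Keeping the template in one function is what gives every announcement the same look, which is the "uniform delivery template" point made in the note. Nothing is sent until `urlopen` is called with a real webhook URL.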


Only half of the event tracks were live panels, but dropping the info for those and the pre-recorded static stuff at the right times lent a highly interactive feel to the whole thing. People would "gather" on the related text channels of interest and use that just like the chat and Q&A facilities of Zoom, asking questions and getting responses from authors and panelists, and they were clearly having a great time with it. Many folks seemed to be adapting well to the Discord environment, using more of the features such as message replies, markdown, and emojis as the weekend went on.

We had a few fun extra items such as restricted-access spaces and some typical Discord role-selection menus, although it was a bit of a struggle working out what to offer there. The small Kaffeeklatsch gatherings ran entirely in Discord's audio/video channel environment, as did a couple of public con-suite social channels, and other than a couple of access-management oopses, for the most part it all seemed to hold up just fine.

Here is a brief text dump of the Discord channel stack. I took a template snapshot near the end of the con and stood up a skeleton server from it, which can spin off further templates as a starting point for building another con server. The storage resources within Discord for keeping an empty server structure around are super-minimal; a dump in JSON/ASCII format with all the roles and permissions captured is less than 100K.

The next section illustrates miscellaneous other tech aspects of the con, mostly about what the "announce bot" looked like to attendees at runtime. There's also a small rundown on Youtube streaming setup that I added later, based on summarizing my notes for a later event.


The bot also incorporated the equivalent of a green-room annunciator, posting into the #green-room channel to help get panelists moving toward their prep sessions well in advance of go-live time. The live tracks ran as back-to-back hour blocks, necessitating the "interleaved Zoom" approach where two different meetings would overlap for up to a half hour while panelists were found, brought in, had their sound and lighting checked, tested screenshares, talked about topics and flow, etc. The usual stuff that we do for them as hosts.
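The interleaving is easy to see with a small calculation. The following Python sketch is only an illustration under stated assumptions: the 30-minute lead time is taken from "up to a half hour" above, the meeting labels are hypothetical, and the dates are example values. It alternates back-to-back sessions on one track between two meetings and computes when each green-room call would go out.

```python
from datetime import datetime, timedelta

PREP_LEAD = timedelta(minutes=30)        # assumed lead, from "up to a half hour"
MEETINGS = ("meeting-A", "meeting-B")    # hypothetical labels for the paired meetings


def green_room_calls(session_starts):
    """Return (call_time, meeting, start) for back-to-back sessions on one track.

    Consecutive sessions alternate between the two meetings, so the next
    panel can be prepped in one meeting while the current one is still live
    in the other.
    """
    calls = []
    for index, start in enumerate(sorted(session_starts)):
        meeting = MEETINGS[index % len(MEETINGS)]
        calls.append((start - PREP_LEAD, meeting, start))
    return calls


# Example hour blocks on a single track (illustrative times only).
starts = [datetime(2021, 8, 14, hour) for hour in (13, 14, 15)]
for call_time, meeting, start in green_room_calls(starts):
    print(f"{call_time:%H:%M} call panelists to {meeting} for the {start:%H:%M} session")
```

The prep call for the second session lands half an hour into the first one, which is exactly the overlap that a single shared meeting could not accommodate.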


The major difference this time was adding the livestream launch, which caused a little confusion Friday night as not all of our hosts had practiced up beforehand as instructed, but by Saturday we all had it running fairly smoothly and starting on time. Streaming also adds that security-enhancing one-way layer between the panelists and the audience, without the cost overhead of Zoom webinars, but having the interactive chat right there along with it, even if it wasn't Zoom chat, made it feel far less isolating to the audience.

[If timestamps seem a little wonky in this, it's because my machines stay on standard time, whereas the con ran on EDT. The bot therefore had to do a "double conversion" from my time to theirs around the next-hour marks, so I was NOT spamming this an hour too early.]
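The clock mismatch in that note can be worked through with fixed UTC offsets. This Python sketch assumes the machine clock is Eastern Standard Time (UTC-5, consistent with the -0500 in the email headers) and the con schedule is Eastern Daylight Time (UTC-4). The function names are made up for illustration and say nothing about how the original shell scripts did it.

```python
from datetime import datetime, timedelta, timezone

EST = timezone(timedelta(hours=-5), "EST")  # assumed machine clock, standard time all year
EDT = timezone(timedelta(hours=-4), "EDT")  # con schedule, daylight time


def to_con_time(machine_local):
    """Interpret a naive machine-clock time as EST and express it in EDT."""
    return machine_local.replace(tzinfo=EST).astimezone(EDT)


def to_machine_time(con_local):
    """Interpret a naive schedule time as EDT and express it in EST."""
    return con_local.replace(tzinfo=EDT).astimezone(EST)


# A session listed at 14:00 con time fires when the machine clock reads 13:00.
session_start = datetime(2021, 8, 14, 14, 0)
print(to_machine_time(session_start).strftime("%H:%M %Z"))

# A machine log stamp of 12:55 is really 13:55 for attendees.
print(to_con_time(datetime(2021, 8, 14, 12, 55)).strftime("%H:%M %Z"))
```

Attaching an explicit offset to every timestamp before comparing it avoids the off-by-one-hour confusion that naive local times invite.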


To be fair, there are other ways to do the "link drop" strategy to pace out the session information people need, but many of them would involve reloading web pages or some other non-obvious or cumbersome process. Because Discord is so event-driven and interactive, it and the announce-bot basically held the con runtime together in what I thought was a fairly seamless way. I think I can take a little personal pride in that, and think about ways to improve it.

And dumping new messages into channels isn't the only way to utilize Discord, either. Webhooks can also overwrite existing messages, so it is entirely possible to have a separate place where information could get updated on the fly within a small group of messages. See this example of a simple handler script, using an existing message ID and the PATCH HTTP method instead of POST. That's just how the webhook API works.

The downside is that previous information would be lost, unless some other means were used to preserve it elsewhere. Mid-con, the convention program folks actually did start gathering links as they emerged and added them to a "past sessions" channel, to serve as a unified index for anyone who came back later looking for specific items -- a bit of manual process, perhaps, but having that beat trying to search across voluminous channel chat for the original bot drops.
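For readers without the handler script to hand, here is a rough Python sketch of the overwrite technique described above. It is not the author's script. The webhook URL and message ID are placeholders, the User-Agent string is invented, and the request is built but not sent. The edit goes to the webhook URL with `/messages/<message id>` appended, using PATCH. To learn the message ID in the first place, the original POST can be made with `?wait=true` appended, in which case Discord returns the created message, including its `id`.

```python
import json
import urllib.request

# Placeholder only: a real webhook URL contains a secret ID and token.
WEBHOOK_URL = "https://discord.com/api/webhooks/WEBHOOK_ID/WEBHOOK_TOKEN"


def build_edit_request(webhook_url, message_id, new_content):
    """Prepare a PATCH that overwrites a message this webhook posted earlier."""
    url = f"{webhook_url}/messages/{message_id}"
    body = json.dumps({"content": new_content}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "User-Agent": "announce-sketch/0.1",  # hypothetical agent string
        },
        method="PATCH",
    )


# Hypothetical message ID saved from an earlier POST made with ?wait=true.
edit = build_edit_request(WEBHOOK_URL, "123456789012345678", "Now playing: Example Reading")
print(edit.get_method(), edit.full_url)
# To actually apply it: urllib.request.urlopen(edit)
```

Because the edit replaces the message in place, anything that should survive (such as links to past sessions) has to be copied elsewhere first, as noted above.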

Date: Mon, 16 Aug 2021 21:46:03 -0500
Subject: Readercon mini-wrapup

Plenty of skepticism may exist around running a con out of Discord, but I have to say it all worked out pretty well last weekend. My grodey-shell-script announce-bot faithfully delivered titles and Youtube links into the channels at the appointed times, requiring only minimal fiddling of when, and chat in the track channels clearly indicated that those present were eagerly waiting for the "bot link drop". A minor change was to announce the livestreamed tracks a little earlier since start time was in the hands of the Zoom host, and then the canned pre-recordings a little later.

In between, those who had signed up for particular Kaffeeklatsches not only received a reminder in the companion Klatsch text channel, but were indeed SUMMONED there by @pinging their role for the slot, essentially their precious ticket to the room. At least two Klatsches were full capacity, and other than the occasional author messing around with the signup-menu themselves and accidentally *losing* their own preset role, it all ran pretty smoothly.

Per-track reminders [in part to indicate that chatter unrelated to the present hour should move to hallway channels] were delivered about five minutes in, and I changed their text presentation at some point to print the clickable Youtube links in somewhat larger text because someone complained about the "embed" format being too small. But the embeds were super-distinctive to help set visual boundaries around the sessions, with bright colors and the standard red Youtube logo as a custom emoji. In a way, far more convenient than having to switch back to a schedule or a Konopas and find a link, click it, then flip back to Discord to interact. Besides, we wanted to treat *pre-existing* videos like they were readings or talks given at particular times, so the first knowledge the attendees ever got of the links came from the bot at the scheduled time. Of course they could then always note them down and watch later too, and I'm sure a lot of inter-track timeshifting went on as everything's still up for grabs.

Live tracks were done with paired/interlaced Zoom accounts, using a single recurring meeting per, and only with hosts and panelists in it. This made scheduling super-easy on the Zoom side; panelists would have one of four join links. Separate sessions were set up in the Youtube streaming, but all set as "unpublished" so people couldn't just look at the Readercon channel and jump the gun on sessions or static playbacks. Believe it or not, as a solution this was good, fast, *and* cheap. Basically, the expense there was a couple of months of Zoom meeting licenses.

We had occasional startup difficulties around streaming out of Zoom, but I think the majority of those were copy/paste errors for streamkeys. [Lesson: don't try to grab text out of a *cell* in a GSheet, click the cell and then copy from the formula box.] This streaming turns out to be fundamentally far simpler than I thought -- a single stream key corresponds to a single Youtube viewing URL, and that's it, as long as the stream is set up right. Start sending from Zoom, the stream goes hot. If it's not set to auto-terminate, it will go "offline" when the Zoom ceases sending and stick around, waiting for more data, for many hours before finally dropping.

One problem, however, was resolution. Everything seems to have emerged in 720p, which is fine for a panel but not other purposes. I'm a bit baffled because on one of my own tests with some full-screen shares, the video seemed to be emerging at 1080 with everything nice and crisp on the Youtube side, but then the next night when I went to seriously record a session it seemed stuck at 720 and never auto-adjusted upward. I probably screwed up when creating that second streamkey, as resolution appears bound to *that* for whatever reason. Or Zoom was simply refusing to send my native screen 1080 due to some unknown factor.

On Saturday afternoon I had given a screenshared "engine room tour" in Discord of a bunch of backend stuff, for our crew and any attendees who wanted to watch, and since that had seemed well-received I decided to do a reprise around dinner-hour on Sunday and let people see it via Zoom or a stream to Youtube. It kinda worked but looked like ass in the detail, including in the Zoom cloud recording. But it was more to show the workflow and features and integrations rather than provide screenscrapes, so it was all good, and some other folks chimed in with more info about things like Airtable and other pieces that the con was using. So. Many. Knobs. My major takeaway is that I'm still a lousy public speaker, especially when slightly nervous and trying to follow notes. [Ask if you want a pointer; it may change depending on how post-editing goes.]

------------------------------
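The Kaffeeklatsch "summons" mentioned in the wrap-up above comes down to a webhook payload that mentions a role. Below is a hedged Python sketch of what such a payload might look like. The role ID, author name, and message wording are all hypothetical. The `<@&ROLE_ID>` mention syntax and the `allowed_mentions` field are standard Discord message features, but whether a ping actually notifies people also depends on server settings not shown here.

```python
import json


def build_klatsch_summons(role_id, author):
    """Build a webhook payload that pings exactly one per-slot role."""
    return {
        "content": f"<@&{role_id}> Your Kaffeeklatsch with {author} is about to start.",
        # Restrict pings to this one role so nothing else in the text can notify.
        "allowed_mentions": {"roles": [role_id]},
    }


# Hypothetical role ID and author name, for illustration only.
summons = build_klatsch_summons("111111111111111111", "Example Author")
print(json.dumps(summons, indent=2))
```

Listing the role explicitly in `allowed_mentions` keeps a stray `@everyone` or another role name in the message text from pinging the wrong crowd.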


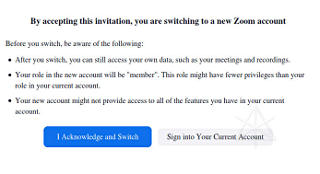

Aside: the Discord permissions matrix

Discord permissions are rich and versatile, and server admins frequently get them wrong. Managing the complexities of this was greatly helped by what I call a "lean matrix" approach, where the server baseline "@everyone" role has absolutely no inherent permissions to begin with. That makes for a fairly useless server at first, until we start building at the channel and category level using only positive permissions as much as possible and only where needed.

The problem is that in Discord, among the role overrides on a channel, an allow overrides a deny as a user's net permissions map is added up, so it's hard to have a default-deny stance with the way most servers get set up. As soon as @everyone gets any inherent capabilities, it becomes much harder to negate those elsewhere -- the most notable case is the infamous "mute role", to disable someone's ability to send and speak. If some other role they have allows sending/speaking in a given channel, then an attempted specific deny role has no effect to shut them up!

So for this server, I essentially had one global role to enable "input", and several of the more traditional ones for "output" and seeing channels at all. Temporarily killing a user's ability to interact across the entire server became as easy as removing their "input" role, without worrying about what other roles they had at the time. That's fairly easy to support with a couple of bot functions, and in this case was mostly for use by the Safety crew [or "Moderators" in other environments] who anticipated an occasional need to "pull someone aside" and have a conversation.

But even the "input" ability was not given at the role level itself; rather, the role was allowed certain types of input out at the channel and category level. Why? Because you might want to allow posting pictures, using reactions, etc in some areas but not others -- again, if the "input role" had those inherent abilities on its own, now you have to scramble around trying to *deny* those additional capabilities across many other areas and hope that it might work. Have a read-only announcements channel? Simply don't add the general input role to it at all. The "positive only" approach is an easy way to have very granular control across your "spaces".

In fact, as the convention server built up a largish stack of roles by the end, *none* of those roles had any permissions turned on, with one exception: the normal "Member" role that everyone received as they registered was allowed to change its nickname on the server. That was it, other than some of the necessary bot capabilities. But even the bots were limited to what they needed to do, only having base permissions such as "manage roles" to allow their gating functions to work.

Despite that, we could give our staff certain power in small areas, because you can still do all that at the category/channel level. For instance, the folks who were going to manage access to the Kaffeeklatsches were permitted [by a special role] to "manage permissions" on precisely one channel: the audio / video channel for those small gatherings that people had to sign up for. With that, they could see the whole role stack, and enable only the "role of the hour" to find the channel. They could also enable any other role to see the channel, or even screw up the more permanent baseline permissions. It took careful training to teach a segment of our staff to use even that limited view into the administrative interface properly, partially because there seemed no easy path to drive it via bot functions.

Another minor hack: the Safety staff was allowed to "manage messages" in their own public channel, so that they could delete errant messages from others that may have given away private information. Even those small things feel like a lot of power to the people they are granted to, and don't compromise any other parts of the server.

The only real use of negative permissions was to make the registration related channels vanish out of the way once someone was fully "Membered up". That was only because @everyone had to be able to see them on initially joining the server, so they could enter their special tokens and complete the process. The "channel overrides" for those were a negative view/post permission for the normal "member" role, and it worked fine despite a little confusion as to why channels would appear and disappear across the process. But you get that pretty much anywhere on that type of role change, even if it's just the typical "I accept the rules" checkbox found on many other servers. Knowing such things happen is simply part of getting used to Discord.

Probably more here than anyone wanted to read about it, but it really does take a while to absorb how this works. Primary takeaway: the lean approach worked really well and enabled easy handling of isolated special cases on the fly as needs developed.
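The "mute role" trap and the lean alternative can be shown with a little bit arithmetic. This Python sketch is a simplified model of how Discord combines channel overrides, written from its published algorithm: it leaves out the administrator and owner shortcuts and per-member overrides. The two flag values are Discord's documented bits for viewing a channel and sending messages. The role setups are invented for illustration and are not the actual Readercon configuration.

```python
VIEW_CHANNEL = 1 << 10
SEND_MESSAGES = 1 << 11


def channel_permissions(base, everyone_override, role_overrides):
    """Combine base permissions with channel overrides.

    Each override is an (allow, deny) pair of bit masks. The @everyone
    override is applied first. All role denies are then pooled and removed,
    and all role allows are pooled and added afterwards, so an allow from
    any one role beats a deny from another.
    """
    allow, deny = everyone_override
    perms = (base & ~deny) | allow

    pooled_allow = pooled_deny = 0
    for allow, deny in role_overrides:
        pooled_allow |= allow
        pooled_deny |= deny
    return (perms & ~pooled_deny) | pooled_allow


# Typical setup: an "attendee" role allows sending, a "muted" role denies it.
attendee = (SEND_MESSAGES, 0)
muted = (0, SEND_MESSAGES)
with_mute = channel_permissions(0, (VIEW_CHANNEL, 0), [attendee, muted])
print("muted user can still send:", bool(with_mute & SEND_MESSAGES))

# Lean setup: sending comes only from one "input" role, so removing that
# role removes the ability, whatever other roles the user holds.
without_input = channel_permissions(0, (VIEW_CHANNEL, 0), [])
print("user without input role can send:", bool(without_input & SEND_MESSAGES))
```

Since role allows are applied after role denies, the dependable way to silence someone is to take away the one role that grants input, which is the approach described above.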